Latency and Jitter test

Like most products, Ostinato has a long list of requested features, and the effort to implement them varies widely. Earlier this year, I decided to implement one big feature for the next Ostinato version, and I zeroed in on latency and jitter measurement.

This is a big feature because it requires timestamping packets, an infrastructure piece that Ostinato does not yet support. It also means changing the very core of Ostinato's highly optimized packet transmission code, which has not had any major changes in almost 8-10 years (for good reason!), so any changes there need to be made carefully.

I estimated about a month to get this done for the base code, after which I would have to do the same for Turbo, which is a different codebase.

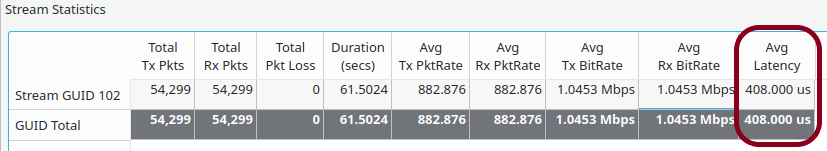

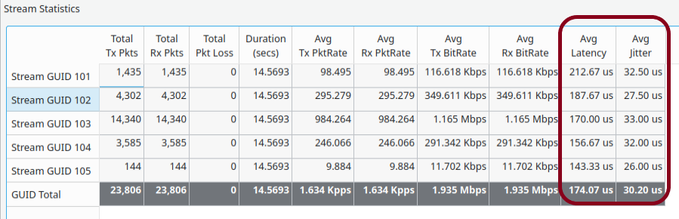

From a requirements point of view, I decided to implement this as a per-stream metric similar to packet loss, traffic rates and other existing per-stream stats. No additional configuration is needed beyond the basic stream stats configuration.

Basic questions

Before starting design and development, I needed to answer some fundamental questions.

Q. As a software traffic generator, would software (kernel/user level) timestamps be acceptable?

ping/iperf timing figures, which are also based on software timestamps, seem to be acceptable to a lot of users, so I assumed the same would hold for Ostinato.

Q. Should TX timestamps be stored within the packet or only locally at the TX agent?

I decided to go with the latter.

Q. Should all TX packets be timestamped?

No, that’s likely to be too much additional cost.

Q. In that case, how often should TX packets be timestamped?

RFC 2544 suggests 30 seconds, while commercial hardware traffic generators likely timestamp every packet. 30 seconds seemed too long to me and 1 second too short (again, from a cost point of view), so I arbitrarily chose a 5-second interval.

Design and challenges

To identify timestamped packets, I added a TimingTag (TTAG) to the packet signature used for stream stats. The TTAG is essentially a unique identifier inserted on the fly into a packet every 5 seconds, used to correlate the TX and RX timestamps of that same packet.
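A minimal Python sketch of the TX-side bookkeeping this implies, assuming a small, wrapping, per-stream TTAG counter. The `TxTimingTags` class and the 8-bit width are hypothetical, not Ostinato's actual code. Only the TTAG goes into the packet; the TX timestamp stays in a local table keyed by stream and TTAG:

```python
from dataclasses import dataclass, field


@dataclass
class TxTimingTags:
    """Hypothetical TX-side TTAG bookkeeping (illustration only)."""
    ttag_bits: int = 8  # assumed TTAG width; the real width is not stated
    next_ttag: dict = field(default_factory=dict)  # stream_id -> next TTAG value
    tx_ts: dict = field(default_factory=dict)      # (stream_id, ttag) -> TX timestamp (ns)

    def tag(self, stream_id: int, tx_time_ns: int) -> int:
        """Allocate a TTAG for this stream's packet and record its TX time locally."""
        ttag = self.next_ttag.get(stream_id, 0)
        # The counter wraps, so a TTAG is unique only within a window of
        # 2**ttag_bits tagged packets per stream; old entries get overwritten.
        self.next_ttag[stream_id] = (ttag + 1) % (1 << self.ttag_bits)
        self.tx_ts[(stream_id, ttag)] = tx_time_ns
        return ttag

    def lookup(self, stream_id: int, ttag: int):
        """Return the recorded TX timestamp for a received TTAG, or None."""
        return self.tx_ts.get((stream_id, ttag))
```

Keeping the timestamp out of the packet means the packet only needs room for a short tag, at the cost of having to bring the TX table and the RX timestamps together before computing latency.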

For these TTAG packets, the TCP/UDP checksum would be incorrect unless we also fix it on the fly. I spent a lot of time experimenting to find the lowest-cost way to do this at TX time, only to discover much later that my approach would fail for interleaved streams. So, for the time being, I decided to live with incorrect checksums for TTAG packets; these are sent out only once every 5 seconds.
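For context, TCP and UDP use the 16-bit one's complement Internet checksum (RFC 1071), which can be patched incrementally when a single 16-bit word changes (RFC 1624, eqn. 3: HC' = ~(~HC + ~m + m')). The Python sketch below shows only that general technique; it is not Ostinato's code and does not address the interleaved-stream problem described above. The function names are hypothetical:

```python
def ones_complement_add(a: int, b: int) -> int:
    """16-bit one's complement addition with end-around carry."""
    s = a + b
    return (s & 0xFFFF) + (s >> 16)


def internet_checksum(words: list[int]) -> int:
    """Full RFC 1071 checksum over a sequence of 16-bit words."""
    total = 0
    for w in words:
        total = ones_complement_add(total, w)
    return ~total & 0xFFFF


def incremental_update(old_cksum: int, old_word: int, new_word: int) -> int:
    """RFC 1624 eqn. 3: patch the checksum when one word changes from old_word to new_word."""
    total = ones_complement_add(~old_cksum & 0xFFFF, ~old_word & 0xFFFF)
    total = ones_complement_add(total, new_word)
    return ~total & 0xFFFF
```

Usage: with `words = [0x4500, 0x0073, 0x0000, 0x4000, 0x4011, 0x0000, 0xC0A8, 0x0001, 0xC0A8, 0x00C7]`, `internet_checksum(words)` is `0xB861`; overwriting the last word with `0x1234` and calling `incremental_update(0xB861, 0x00C7, 0x1234)` gives the same result as recomputing the full checksum, without touching the rest of the packet.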

However, I made sure that these packets do get counted at the RX end even if they have an incorrect TCP/UDP checksum.

The other challenge was that with multiple streams, in either sequential or interleaved transmit mode, there should be one TTAG packet every 5 seconds for each TX stream. Stream rates, durations and packet counts are all user-configured, which complicates this. After several missteps and a few different prototypes, I landed on something that worked for both modes and was performant.
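One simple way to express the "one TTAG per stream every 5 seconds" rule is a per-stream due time, checked whenever a packet of that stream goes out. This Python sketch is an assumption for illustration (the `TtagScheduler` name is hypothetical), not the approach Ostinato settled on:

```python
class TtagScheduler:
    """Hypothetical per-stream TTAG scheduler: tags at most one packet per
    stream per interval, however the streams are interleaved or sequenced."""

    def __init__(self, interval_ns: int = 5_000_000_000):  # 5-second default
        self.interval_ns = interval_ns
        self.next_due = {}  # stream_id -> earliest time the next TTAG may be sent

    def should_tag(self, stream_id: int, now_ns: int) -> bool:
        due = self.next_due.get(stream_id)
        if due is None or now_ns >= due:
            # Tag this packet; this stream's next TTAG is due one interval later.
            self.next_due[stream_id] = now_ns + self.interval_ns
            return True
        return False
```

Usage: with a 5 ns interval (for readability) and streams 1 and 2 interleaved at times 0 through 8, only the packets at `(1, 0)`, `(2, 1)`, `(1, 5)` and `(2, 6)` get tagged. Because the state is per stream, the same logic covers sequential mode, where each stream simply starts with no due time. A real TX path would avoid a per-packet dictionary lookup; this only illustrates the rule.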

On the RX side, we have to capture and parse each packet to identify whether it is a TTAG packet and, if so, record its RX timestamp. Fortunately, we already capture and parse all packets carrying the stream stats signature. I just extended that code to also parse the TTAG and record the RX timestamp.

Now that we have both TX and RX timestamps, we can diff the two to calculate the latency on a per-stream basis; we keep doing this over the entire duration of the transmit to get the average latency.
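A minimal Python sketch of that per-stream averaging, assuming matched TX/RX timestamp pairs in nanoseconds are already available. The `LatencyStats` class is hypothetical. Note that if the TX and RX timestamps come from different clocks (e.g. different hosts), any clock offset adds directly to every latency sample:

```python
class LatencyStats:
    """Hypothetical running per-stream average latency."""

    def __init__(self):
        self.count = 0
        self.total_ns = 0

    def add(self, tx_ts_ns: int, rx_ts_ns: int) -> int:
        """Record one TTAG sample and return its latency (RX minus TX)."""
        latency = rx_ts_ns - tx_ts_ns
        self.count += 1
        self.total_ns += latency
        return latency

    @property
    def average_ns(self) -> float:
        """Average latency over all samples so far (0.0 if none yet)."""
        return self.total_ns / self.count if self.count else 0.0
```

Usage: keep one instance per stream, e.g. `stats.setdefault(stream_id, LatencyStats()).add(tx, rx)`. Storing a running sum and count means the average can be reported at any time during the transmit without keeping every sample.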

Finally, I added the protobuf API changes to send the latency value from agent to controller as part of stream stats, and the GUI code to display it in the stream stats window.

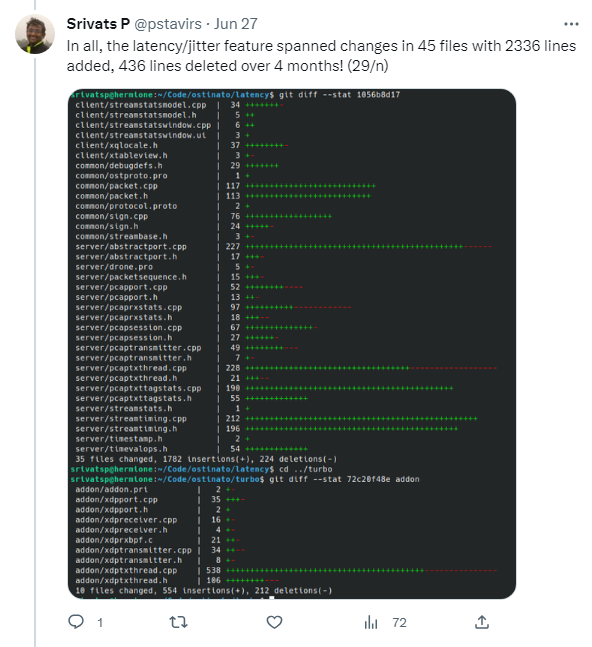

Next, I had to do it all again for the high-speed Turbo codebase, which is completely different from the base code. Moreover, Turbo has two completely different TX algorithms (one for low-speed, high-accuracy transmit and another for high-speed, high-throughput transmit), and both needed changes.

While making these changes, I had to stop and refactor existing code multiple times to fit in the new code, and at other times rewrite the new code to be more efficient so as not to reduce TX rates. Of course, I introduced a few bugs along the way; hopefully, I've caught and fixed 'em all!

With latency available, calculating jitter was straightforward.
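The post doesn't say which jitter definition Ostinato uses. As a hedged sketch, one common choice is the mean absolute difference between consecutive latency samples, in the spirit of IP packet delay variation (RFC 3393). The function name `mean_abs_jitter` is hypothetical:

```python
def mean_abs_jitter(latencies_ns: list[int]) -> float:
    """Jitter as the mean absolute difference between consecutive latency
    samples (an assumed definition, not necessarily Ostinato's)."""
    if len(latencies_ns) < 2:
        return 0.0  # need at least two samples to measure variation
    diffs = [abs(b - a) for a, b in zip(latencies_ns, latencies_ns[1:])]
    return sum(diffs) / len(diffs)
```

Usage: for latency samples `[250, 350, 300, 300]` the consecutive differences are 100, 50 and 0, giving a jitter of 50.0. Unlike latency itself, this measure is insensitive to a constant clock offset between TX and RX, since the offset cancels in each difference.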

In the end, it took more than 2.5K lines of code spread over 45 files and almost 4 months. So much for my estimate of 1 month!

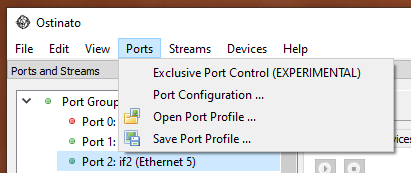

As you may have guessed from the screenshot above, this feature was built in public, with regular updates posted to a Twitter thread over those 4 months.

Follow me on Twitter for more features built in public in the future.

Per-stream latency and jitter will be available in the next Ostinato version - v1.3.
